Large Language Models (LLMs) have emerged as a revolutionary force in artificial intelligence. Trained on vast amounts of human language, these models have the potential to transform how we interact with technology and information. This article covers what LLMs are, why they exist, where they are applied, and the essentials of developing them.

Understanding Large Language Models (LLMs)

Large Language Models, often referred to as LLMs, are advanced AI systems capable of understanding, generating, and responding to human language in a remarkably natural manner. Powered by immense datasets and advanced machine learning techniques, these models can comprehend context and sentiment, and can generate coherent text that is often hard to distinguish from human-written content.

Inside ChatGPT's brain. (serokell.io/blog/language-models-behind-chatgpt)

The Necessity and Applications of LLMs

LLMs are a response to the growing need for AI that can handle natural language more effectively. They find applications in a wide array of fields, from content generation and translation to chatbots and sentiment analysis. Their ability to process and generate human-like text makes them invaluable for tasks that require nuanced language understanding.

Developing LLMs: A Multi-faceted Approach

Developing an LLM requires a fusion of data, computational power, and algorithmic expertise. A massive amount of diverse, high-quality training data is essential to ensure the model learns the intricacies of human language. Powerful hardware and specialized software are necessary for the complex training process. Algorithmic advancements, like those found in transformer architectures, play a pivotal role in enhancing the model’s capabilities.
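To make the mention of transformer architectures more concrete, here is a minimal sketch of scaled dot-product attention, the operation at the heart of a transformer layer. It uses plain Python lists and toy two-dimensional vectors; the function names `softmax` and `attention` are illustrative choices, and real models add learned projections, multiple heads, and optimized tensor libraries on top of this idea.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Toy example: three token vectors attending to each other (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
```

Each output vector is a blend of all the input vectors, weighted by how similar they are to the query. This is how a transformer lets every token draw on context from the rest of the sequence.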

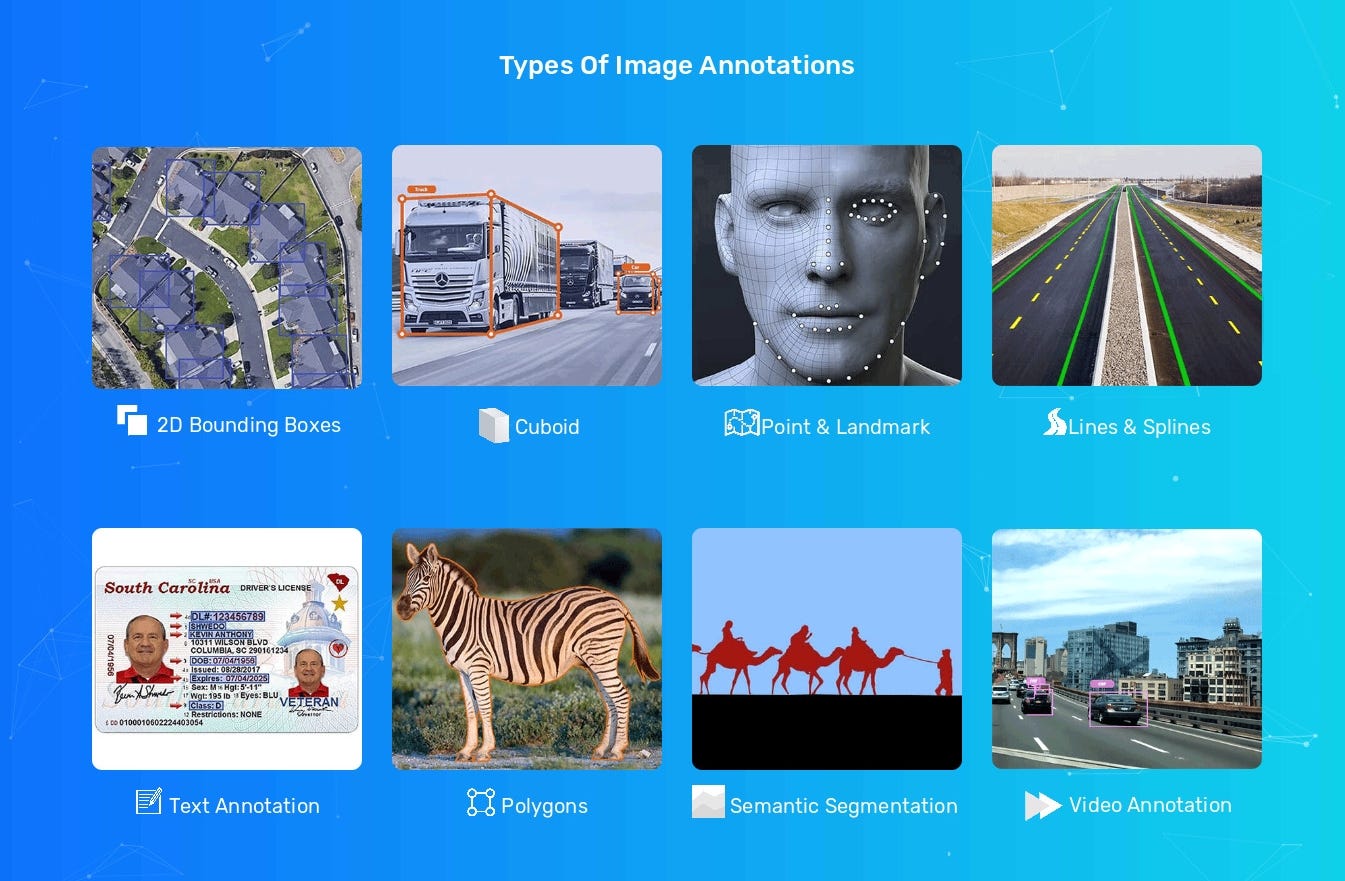

The Role of Data Annotation Services

Data annotation services play a critical role in LLM development. Accurate text annotation is the foundation on which these models are built. Text annotation services label text for tasks such as named entity recognition and sentiment analysis, producing labeled datasets that help LLMs understand and generate text with high accuracy.
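As an illustration of what a labeled example might look like, the sketch below models one annotated sentence with entity spans and a sentiment label. The class names, field names, and label set (`ORG`, `LOC`, `positive`) are hypothetical; annotation providers each use their own schema.

```python
from dataclasses import dataclass

@dataclass
class EntitySpan:
    start: int   # character offset where the entity begins
    end: int     # character offset just past the entity
    label: str   # entity type, e.g. "ORG" or "LOC"

@dataclass
class AnnotatedText:
    text: str
    entities: list   # list of EntitySpan
    sentiment: str   # e.g. "positive", "negative", "neutral"

def entity_texts(record):
    """Return (surface text, label) pairs, checking each span fits the text."""
    pairs = []
    for span in record.entities:
        if not 0 <= span.start < span.end <= len(record.text):
            raise ValueError(f"span {span} falls outside the text")
        pairs.append((record.text[span.start:span.end], span.label))
    return pairs

# Hypothetical annotated record, made up for illustration.
record = AnnotatedText(
    text="Acme opened a friendly new office in Berlin.",
    entities=[EntitySpan(0, 4, "ORG"), EntitySpan(37, 43, "LOC")],
    sentiment="positive",
)
```

Calling `entity_texts(record)` recovers the labeled phrases from their offsets. A check like this is a cheap way to catch misaligned annotations before they reach training.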

The Imperative of Accuracy and Ethical Considerations

Accuracy is paramount in LLMs, especially when they are used for generating content or making critical decisions. Ensuring that these models deliver reliable and unbiased results is essential for their ethical and responsible use. Addressing bias and building in transparency and accountability are crucial to deploying LLMs responsibly.
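One simple way to look for uneven results is to measure accuracy separately for different groups of inputs and compare. The sketch below does this over a handful of made-up evaluation records; the group names, the labels, and the `accuracy_by_group` helper are all hypothetical, and a real audit would use far more data and additional fairness measures.

```python
from collections import defaultdict

def accuracy_by_group(examples):
    """Map each group name to the fraction of its examples predicted correctly."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, expected, predicted in examples:
        total[group] += 1
        if expected == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation results: (group, expected label, model output).
examples = [
    ("dialect_a", "positive", "positive"),
    ("dialect_a", "negative", "negative"),
    ("dialect_b", "positive", "negative"),
    ("dialect_b", "negative", "negative"),
]
scores = accuracy_by_group(examples)
# Difference between the best-served and worst-served group.
gap = max(scores.values()) - min(scores.values())
```

A large gap between groups does not prove bias on its own, but it flags where human reviewers should look more closely.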

The Human Factor in LLMs

While LLMs offer immense capabilities, they are not without challenges. Weighing their benefits against ethical concerns, algorithmic bias, and the possible misuse of generated content is a significant challenge. Human involvement in model development, oversight, and continuous improvement is vital to ensure that LLMs are a force for positive change.

In conclusion, Large Language Models hold incredible promise for reshaping how we interact with AI and technology. Their development requires a thoughtful, comprehensive approach that values accuracy, ethics, and the human element. By harnessing the power of data annotation services and adhering to ethical principles, we can unlock the full potential of LLMs while ensuring they serve the greater good.